Webpage Loading and Scraping#

Sports Worked Today#

More often than not, when I point a request at a page, I get back very little of the HTML. Let’s see an example!

import requests

from bs4 import BeautifulSoup

import pandas as pa

url = "https://fbref.com/en/squads/361ca564/Tottenham-Hotspur-Stats"

r = requests.get(url)

soup = BeautifulSoup(r.text, 'html.parser')  # name the parser explicitly to avoid the bs4 warning

tables = soup.find_all('table')

pa.read_html(str(tables[0]))[0]

| | Unnamed: 0_level_0 | Unnamed: 1_level_0 | Unnamed: 2_level_0 | Unnamed: 3_level_0 | Playing Time | | | | Performance | | | | | | | Per 90 Minutes | | | | | Expected | | | | Per 90 Minutes | | | | | Unnamed: 29_level_0 |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| | Player | Nation | Pos | Age | MP | Starts | Min | 90s | Gls | Ast | G-PK | PK | PKatt | CrdY | CrdR | Gls | Ast | G+A | G-PK | G+A-PK | xG | npxG | xA | npxG+xA | xG | xA | xG+xA | npxG | npxG+xA | Matches |

| 0 | Hugo Lloris | fr FRA | GK | 35-033 | 20 | 20 | 1800.0 | 20.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.0 | 0.0 | 0.5 | 0.5 | 0.00 | 0.03 | 0.03 | 0.00 | 0.03 | Matches |

| 1 | Pierre Højbjerg | dk DEN | MF | 26-176 | 19 | 19 | 1697.0 | 18.9 | 2.0 | 1.0 | 2.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.11 | 0.05 | 0.16 | 0.11 | 0.16 | 1.6 | 1.6 | 1.0 | 2.5 | 0.08 | 0.05 | 0.13 | 0.08 | 0.13 | Matches |

| 2 | Eric Dier | eng ENG | DF | 28-013 | 19 | 19 | 1631.0 | 18.1 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.4 | 0.4 | 1.3 | 1.7 | 0.02 | 0.07 | 0.09 | 0.02 | 0.09 | Matches |

| 3 | Harry Kane | eng ENG | FW | 28-184 | 19 | 18 | 1612.0 | 17.9 | 5.0 | 2.0 | 4.0 | 1.0 | 1.0 | 3.0 | 0.0 | 0.28 | 0.11 | 0.39 | 0.22 | 0.33 | 8.9 | 8.1 | 3.3 | 11.4 | 0.50 | 0.19 | 0.68 | 0.45 | 0.64 | Matches |

| 4 | Son Heung-min | kr KOR | FW,MF | 29-204 | 17 | 17 | 1483.0 | 16.5 | 8.0 | 3.0 | 8.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.49 | 0.18 | 0.67 | 0.49 | 0.67 | 6.9 | 6.9 | 3.5 | 10.4 | 0.42 | 0.21 | 0.63 | 0.42 | 0.63 | Matches |

| 5 | Sergio Reguilón | es ESP | DF | 25-043 | 18 | 17 | 1362.0 | 15.1 | 1.0 | 3.0 | 1.0 | 0.0 | 0.0 | 3.0 | 0.0 | 0.07 | 0.20 | 0.26 | 0.07 | 0.26 | 1.4 | 1.4 | 3.3 | 4.6 | 0.09 | 0.22 | 0.31 | 0.09 | 0.31 | Matches |

| 6 | Lucas Moura | br BRA | FW,MF | 29-168 | 19 | 15 | 1334.0 | 14.8 | 2.0 | 3.0 | 2.0 | 0.0 | 0.0 | 2.0 | 0.0 | 0.13 | 0.20 | 0.34 | 0.13 | 0.34 | 3.2 | 3.2 | 2.5 | 5.7 | 0.21 | 0.17 | 0.39 | 0.21 | 0.39 | Matches |

| 7 | Oliver Skipp | eng ENG | MF | 21-134 | 18 | 14 | 1350.0 | 15.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 6.0 | 0.0 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.2 | 0.2 | 0.9 | 1.1 | 0.01 | 0.06 | 0.07 | 0.01 | 0.07 | Matches |

| 8 | Emerson | br BRA | DF | 23-014 | 15 | 14 | 1238.0 | 13.8 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 3.0 | 0.0 | 0.00 | 0.07 | 0.07 | 0.00 | 0.07 | 0.4 | 0.4 | 1.5 | 2.0 | 0.03 | 0.11 | 0.14 | 0.03 | 0.14 | Matches |

| 9 | Davinson Sánchez | co COL | DF | 25-230 | 14 | 12 | 1111.0 | 12.3 | 2.0 | 0.0 | 2.0 | 0.0 | 0.0 | 3.0 | 0.0 | 0.16 | 0.00 | 0.16 | 0.16 | 0.16 | 1.6 | 1.6 | 0.1 | 1.7 | 0.13 | 0.01 | 0.14 | 0.13 | 0.14 | Matches |

| 10 | Ben Davies | wls WAL | DF | 28-279 | 11 | 10 | 931.0 | 10.3 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 2.0 | 0.0 | 0.00 | 0.10 | 0.10 | 0.00 | 0.10 | 1.1 | 1.1 | 0.5 | 1.6 | 0.11 | 0.04 | 0.16 | 0.11 | 0.16 | Matches |

| 11 | Japhet Tanganga | eng ENG | DF | 22-303 | 11 | 10 | 735.0 | 8.2 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 4.0 | 1.0 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.4 | 0.4 | 0.4 | 0.8 | 0.05 | 0.05 | 0.09 | 0.05 | 0.09 | Matches |

| 12 | Dele Alli | eng ENG | MF,FW | 25-292 | 10 | 8 | 657.0 | 7.3 | 1.0 | 0.0 | 0.0 | 1.0 | 1.0 | 1.0 | 0.0 | 0.14 | 0.00 | 0.14 | 0.00 | 0.00 | 1.5 | 0.7 | 0.8 | 1.5 | 0.20 | 0.11 | 0.31 | 0.10 | 0.21 | Matches |

| 13 | Cristian Romero | ar ARG | DF | 23-276 | 7 | 6 | 533.0 | 5.9 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 4.0 | 0.0 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.0 | 0.0 | 0.0 | 0.0 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | Matches |

| 14 | Harry Winks | eng ENG | MF | 25-360 | 9 | 6 | 526.0 | 5.8 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 2.0 | 0.0 | 0.00 | 0.17 | 0.17 | 0.00 | 0.17 | 0.1 | 0.1 | 1.4 | 1.5 | 0.01 | 0.24 | 0.25 | 0.01 | 0.25 | Matches |

| 15 | Tanguy Ndombele | fr FRA | MF | 25-031 | 9 | 6 | 484.0 | 5.4 | 1.0 | 1.0 | 1.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.19 | 0.19 | 0.37 | 0.19 | 0.37 | 0.5 | 0.5 | 0.4 | 0.9 | 0.09 | 0.07 | 0.16 | 0.09 | 0.16 | Matches |

| 16 | Steven Bergwijn | nl NED | FW | 24-112 | 10 | 4 | 414.0 | 4.6 | 2.0 | 1.0 | 2.0 | 0.0 | 0.0 | 1.0 | 0.0 | 0.43 | 0.22 | 0.65 | 0.43 | 0.65 | 1.9 | 1.9 | 0.5 | 2.4 | 0.42 | 0.11 | 0.53 | 0.42 | 0.53 | Matches |

| 17 | Giovani Lo Celso | ar ARG | MF,FW | 25-294 | 9 | 2 | 238.0 | 2.6 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.7 | 0.7 | 0.6 | 1.4 | 0.28 | 0.24 | 0.52 | 0.28 | 0.52 | Matches |

| 18 | Ryan Sessegnon | eng ENG | DF,MF | 21-255 | 4 | 2 | 221.0 | 2.5 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.0 | 0.0 | 0.2 | 0.2 | 0.02 | 0.07 | 0.09 | 0.02 | 0.09 | Matches |

| 19 | Matt Doherty | ie IRL | DF,MF | 30-012 | 6 | 1 | 237.0 | 2.6 | 0.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.00 | 0.38 | 0.38 | 0.00 | 0.38 | 1.1 | 1.1 | 0.3 | 1.4 | 0.43 | 0.11 | 0.54 | 0.43 | 0.54 | Matches |

| 20 | Bryan | es ESP | FW,MF | 20-351 | 9 | 0 | 94.0 | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.0 | 0.0 | 0.1 | 0.1 | 0.03 | 0.07 | 0.10 | 0.03 | 0.10 | Matches |

| 21 | Joe Rodon | wls WAL | DF | 24-098 | 1 | 0 | 79.0 | 0.9 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.0 | 0.0 | 0.0 | 0.0 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | Matches |

| 22 | Brandon Austin | eng ENG | GK | 23-020 | 0 | 0 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | Matches |

| 23 | Pierluigi Gollini | it ITA | GK | 26-316 | 0 | 0 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | Matches |

| 24 | Dilan Markanday | eng ENG | FW,MF | 20-161 | 0 | 0 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | Matches |

| 25 | Tobi Omole | eng ENG | DF | 22-042 | 0 | 0 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | Matches |

| 26 | Dane Scarlett | eng ENG | FW | 17-310 | 0 | 0 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | Matches |

| 27 | Moussa Sissoko | fr FRA | MF | 32-165 | 0 | 0 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | Matches |

| 28 | Harvey White | eng ENG | MF | 20-131 | 0 | 0 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | Matches |

| 29 | Squad Total | NaN | NaN | 27.0 | 20 | 220 | 1800.0 | 20.0 | 24.0 | 18.0 | 22.0 | 2.0 | 2.0 | 36.0 | 1.0 | 1.20 | 0.90 | 2.10 | 1.10 | 2.00 | 31.6 | 30.1 | 23.0 | 53.1 | 1.58 | 1.15 | 2.73 | 1.50 | 2.65 | NaN |

| 30 | Opponent Total | NaN | NaN | 27.5 | 20 | 220 | 1800.0 | 20.0 | 23.0 | 19.0 | 22.0 | 1.0 | 1.0 | 44.0 | 5.0 | 1.15 | 0.95 | 2.10 | 1.10 | 2.05 | 21.4 | 20.6 | 16.2 | 36.8 | 1.07 | 0.81 | 1.88 | 1.03 | 1.84 | NaN |

I usually have issues with this site but it worked today. Great place for all sports data!

News Source with Javascript#

Here is a scrape I did a while back that still has the issues I want to discuss. I found a table from National Public Radio listing the individuals involved in the attack on the Capitol on 1/6/21: https://www.npr.org/2021/02/09/965472049/the-capitol-siege-the-arrested-and-their-stories#database

The link will take you directly to the table I am interested in scraping. From this page, I found the address the table is actually loaded from. This gets me around parsing through all the extra frames. It is stored as my url below. Take a look at it.

url = "https://apps.npr.org/dailygraphics/graphics/capitol-riot-table-20210204/table.html"

r = requests.get(url)

soup = BeautifulSoup(r.text, 'html.parser')

soup.find_all('table')

[]

Looking at the developer tools, there is clearly a table called ‘riot-table’, but I get nothing from this call! Not even any tables!
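One way to picture what is going on: the HTML the server sends can be just an empty container plus a script, and the table only exists after a browser runs that script. Below is a minimal sketch of that idea using only the standard library. Both HTML snippets and the `count_tables` helper are made up for illustration; they are not the real NPR markup.

```python
from html.parser import HTMLParser


class TableCounter(HTMLParser):
    """Counts opening <table> tags in a chunk of HTML."""

    def __init__(self):
        super().__init__()
        self.tables = 0

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables += 1


def count_tables(html):
    counter = TableCounter()
    counter.feed(html)
    return counter.tables


# Hypothetical markup: what the server sends before any JavaScript runs.
raw_html = """
<html><body>
  <div id="table-container"></div>
  <script src="build-table.js"></script>
</body></html>
"""

# Hypothetical markup: the same page after a browser has run the script.
rendered_html = """
<html><body>
  <div id="table-container">
    <table id="riot-table"><tr><td>row</td></tr></table>
  </div>
</body></html>
"""

print(count_tables(raw_html))       # 0
print(count_tables(rendered_html))  # 1
```

A plain request only ever sees something like the first snippet, which would explain the empty list.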

This is where the big guns come in! The code below was taken from https://colab.research.google.com/github/nestauk/im-tutorials/blob/3-ysi-tutorial/notebooks/Web-Scraping/Web Scraping Tutorial.ipynb and installs the packages we need to scrape the data. Chromium is the open-source browser that Google Chrome is built on; it is how the code will visit websites. Selenium is the big tool. It lets us interact with a webpage and its JavaScript. Essentially, the machine opens a browser and goes to the website for us (headless here, so no window actually appears).

# RUN THIS CELL WHEN USING THE NOTEBOOK ON COLAB - NO PREVIOUS INSTALLATION OF SELENIUM IS NEEDED

# install chromium, its driver, and selenium

!apt update

!apt install chromium-chromedriver

!pip install selenium

# set options to be headless

from selenium import webdriver

options = webdriver.ChromeOptions()

options.add_argument('--headless')

options.add_argument('--no-sandbox')

options.add_argument('--disable-dev-shm-usage')

# open it, go to a website, and get results

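# chromedriver is looked up on the PATH; newer Selenium releases no longer accept its path as a positional argument
driver = webdriver.Chrome(options=options)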


Get:1 http://security.ubuntu.com/ubuntu bionic-security InRelease [88.7 kB]

Get:2 https://cloud.r-project.org/bin/linux/ubuntu bionic-cran40/ InRelease [3,626 B]

Ign:3 https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1804/x86_64 InRelease

Hit:4 http://archive.ubuntu.com/ubuntu bionic InRelease

Get:5 http://ppa.launchpad.net/c2d4u.team/c2d4u4.0+/ubuntu bionic InRelease [15.9 kB]

Ign:6 https://developer.download.nvidia.com/compute/machine-learning/repos/ubuntu1804/x86_64 InRelease

Get:7 https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1804/x86_64 Release [696 B]

Hit:8 https://developer.download.nvidia.com/compute/machine-learning/repos/ubuntu1804/x86_64 Release

Get:9 https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1804/x86_64 Release.gpg [836 B]

Get:10 http://archive.ubuntu.com/ubuntu bionic-updates InRelease [88.7 kB]

Hit:11 http://ppa.launchpad.net/cran/libgit2/ubuntu bionic InRelease

Get:12 http://archive.ubuntu.com/ubuntu bionic-backports InRelease [74.6 kB]

Get:13 http://ppa.launchpad.net/deadsnakes/ppa/ubuntu bionic InRelease [15.9 kB]

Hit:14 http://ppa.launchpad.net/graphics-drivers/ppa/ubuntu bionic InRelease

Get:15 https://cloud.r-project.org/bin/linux/ubuntu bionic-cran40/ Packages [76.0 kB]

Get:16 http://security.ubuntu.com/ubuntu bionic-security/universe amd64 Packages [1,463 kB]

Get:17 http://security.ubuntu.com/ubuntu bionic-security/main amd64 Packages [2,517 kB]

Get:18 http://security.ubuntu.com/ubuntu bionic-security/restricted amd64 Packages [738 kB]

Get:20 https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1804/x86_64 Packages [872 kB]

Get:21 http://ppa.launchpad.net/c2d4u.team/c2d4u4.0+/ubuntu bionic/main Sources [1,823 kB]

Get:22 http://ppa.launchpad.net/c2d4u.team/c2d4u4.0+/ubuntu bionic/main amd64 Packages [934 kB]

Get:23 http://archive.ubuntu.com/ubuntu bionic-updates/restricted amd64 Packages [771 kB]

Get:24 http://archive.ubuntu.com/ubuntu bionic-updates/universe amd64 Packages [2,242 kB]

Get:25 http://archive.ubuntu.com/ubuntu bionic-updates/main amd64 Packages [2,954 kB]

Get:26 http://ppa.launchpad.net/deadsnakes/ppa/ubuntu bionic/main amd64 Packages [45.3 kB]

Fetched 14.7 MB in 8s (1,960 kB/s)

Reading package lists... Done

Building dependency tree

Reading state information... Done

64 packages can be upgraded. Run 'apt list --upgradable' to see them.

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following additional packages will be installed:

chromium-browser chromium-browser-l10n chromium-codecs-ffmpeg-extra

Suggested packages:

webaccounts-chromium-extension unity-chromium-extension

The following NEW packages will be installed:

chromium-browser chromium-browser-l10n chromium-chromedriver

chromium-codecs-ffmpeg-extra

0 upgraded, 4 newly installed, 0 to remove and 64 not upgraded.

Need to get 95.3 MB of archives.

After this operation, 327 MB of additional disk space will be used.

Get:1 http://archive.ubuntu.com/ubuntu bionic-updates/universe amd64 chromium-codecs-ffmpeg-extra amd64 97.0.4692.71-0ubuntu0.18.04.1 [1,142 kB]

Get:2 http://archive.ubuntu.com/ubuntu bionic-updates/universe amd64 chromium-browser amd64 97.0.4692.71-0ubuntu0.18.04.1 [84.7 MB]

Get:3 http://archive.ubuntu.com/ubuntu bionic-updates/universe amd64 chromium-browser-l10n all 97.0.4692.71-0ubuntu0.18.04.1 [4,370 kB]

Get:4 http://archive.ubuntu.com/ubuntu bionic-updates/universe amd64 chromium-chromedriver amd64 97.0.4692.71-0ubuntu0.18.04.1 [5,055 kB]

Fetched 95.3 MB in 4s (23.1 MB/s)

Selecting previously unselected package chromium-codecs-ffmpeg-extra.

(Reading database ... 155229 files and directories currently installed.)

Preparing to unpack .../chromium-codecs-ffmpeg-extra_97.0.4692.71-0ubuntu0.18.04.1_amd64.deb ...

Unpacking chromium-codecs-ffmpeg-extra (97.0.4692.71-0ubuntu0.18.04.1) ...

Selecting previously unselected package chromium-browser.

Preparing to unpack .../chromium-browser_97.0.4692.71-0ubuntu0.18.04.1_amd64.deb ...

Unpacking chromium-browser (97.0.4692.71-0ubuntu0.18.04.1) ...

Selecting previously unselected package chromium-browser-l10n.

Preparing to unpack .../chromium-browser-l10n_97.0.4692.71-0ubuntu0.18.04.1_all.deb ...

Unpacking chromium-browser-l10n (97.0.4692.71-0ubuntu0.18.04.1) ...

Selecting previously unselected package chromium-chromedriver.

Preparing to unpack .../chromium-chromedriver_97.0.4692.71-0ubuntu0.18.04.1_amd64.deb ...

Unpacking chromium-chromedriver (97.0.4692.71-0ubuntu0.18.04.1) ...

Setting up chromium-codecs-ffmpeg-extra (97.0.4692.71-0ubuntu0.18.04.1) ...

Setting up chromium-browser (97.0.4692.71-0ubuntu0.18.04.1) ...

update-alternatives: using /usr/bin/chromium-browser to provide /usr/bin/x-www-browser (x-www-browser) in auto mode

update-alternatives: using /usr/bin/chromium-browser to provide /usr/bin/gnome-www-browser (gnome-www-browser) in auto mode

Setting up chromium-chromedriver (97.0.4692.71-0ubuntu0.18.04.1) ...

Setting up chromium-browser-l10n (97.0.4692.71-0ubuntu0.18.04.1) ...

Processing triggers for man-db (2.8.3-2ubuntu0.1) ...

Processing triggers for hicolor-icon-theme (0.17-2) ...

Processing triggers for mime-support (3.60ubuntu1) ...

Processing triggers for libc-bin (2.27-3ubuntu1.3) ...

/sbin/ldconfig.real: /usr/local/lib/python3.7/dist-packages/ideep4py/lib/libmkldnn.so.0 is not a symbolic link

Collecting selenium

Downloading selenium-4.1.0-py3-none-any.whl (958 kB)

|████████████████████████████████| 958 kB 5.1 MB/s

Collecting trio~=0.17

Downloading trio-0.19.0-py3-none-any.whl (356 kB)

|████████████████████████████████| 356 kB 62.0 MB/s

Collecting urllib3[secure]~=1.26

Downloading urllib3-1.26.8-py2.py3-none-any.whl (138 kB)

|████████████████████████████████| 138 kB 38.5 MB/s

Collecting trio-websocket~=0.9

Downloading trio_websocket-0.9.2-py3-none-any.whl (16 kB)

Requirement already satisfied: idna in /usr/local/lib/python3.7/dist-packages (from trio~=0.17->selenium) (2.10)

Collecting outcome

Downloading outcome-1.1.0-py2.py3-none-any.whl (9.7 kB)

Collecting sniffio

Downloading sniffio-1.2.0-py3-none-any.whl (10 kB)

Requirement already satisfied: attrs>=19.2.0 in /usr/local/lib/python3.7/dist-packages (from trio~=0.17->selenium) (21.4.0)

Collecting async-generator>=1.9

Downloading async_generator-1.10-py3-none-any.whl (18 kB)

Requirement already satisfied: sortedcontainers in /usr/local/lib/python3.7/dist-packages (from trio~=0.17->selenium) (2.4.0)

Collecting wsproto>=0.14

Downloading wsproto-1.0.0-py3-none-any.whl (24 kB)

Requirement already satisfied: certifi in /usr/local/lib/python3.7/dist-packages (from urllib3[secure]~=1.26->selenium) (2021.10.8)

Collecting pyOpenSSL>=0.14

Downloading pyOpenSSL-21.0.0-py2.py3-none-any.whl (55 kB)

|████████████████████████████████| 55 kB 2.3 MB/s

Collecting cryptography>=1.3.4

Downloading cryptography-36.0.1-cp36-abi3-manylinux_2_24_x86_64.whl (3.6 MB)

|████████████████████████████████| 3.6 MB 46.1 MB/s

Requirement already satisfied: cffi>=1.12 in /usr/local/lib/python3.7/dist-packages (from cryptography>=1.3.4->urllib3[secure]~=1.26->selenium) (1.15.0)

Requirement already satisfied: pycparser in /usr/local/lib/python3.7/dist-packages (from cffi>=1.12->cryptography>=1.3.4->urllib3[secure]~=1.26->selenium) (2.21)

Requirement already satisfied: six>=1.5.2 in /usr/local/lib/python3.7/dist-packages (from pyOpenSSL>=0.14->urllib3[secure]~=1.26->selenium) (1.15.0)

Collecting h11<1,>=0.9.0

Downloading h11-0.13.0-py3-none-any.whl (58 kB)

|████████████████████████████████| 58 kB 4.8 MB/s

Requirement already satisfied: typing-extensions in /usr/local/lib/python3.7/dist-packages (from h11<1,>=0.9.0->wsproto>=0.14->trio-websocket~=0.9->selenium) (3.10.0.2)

Installing collected packages: sniffio, outcome, h11, cryptography, async-generator, wsproto, urllib3, trio, pyOpenSSL, trio-websocket, selenium

Attempting uninstall: urllib3

Found existing installation: urllib3 1.24.3

Uninstalling urllib3-1.24.3:

Successfully uninstalled urllib3-1.24.3

ERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.

requests 2.23.0 requires urllib3!=1.25.0,!=1.25.1,<1.26,>=1.21.1, but you have urllib3 1.26.8 which is incompatible.

datascience 0.10.6 requires folium==0.2.1, but you have folium 0.8.3 which is incompatible.

Successfully installed async-generator-1.10 cryptography-36.0.1 h11-0.13.0 outcome-1.1.0 pyOpenSSL-21.0.0 selenium-4.1.0 sniffio-1.2.0 trio-0.19.0 trio-websocket-0.9.2 urllib3-1.26.8 wsproto-1.0.0

Now with all that done, I use the driver to go to the exact same website and voila we have the table!

driver.get(url)

soup = BeautifulSoup(driver.page_source, 'html.parser')

tables = soup.find_all('table')

#tables

pa.read_html(str(tables[0]))[0]

| | Name | Summary | Charges | Case Updates |

|---|---|---|---|---|

| 0 | Stefanie Nicole Chiguer New 36 years old Dracu... | Stefanie Nicole Chiguer came to the attention ... | Federal: Entering and Remaining in a Restricte... | NaN |

| 1 | Alan Fischer New 27 years old Tampa, Florida | According to court documents, Alan Fischer III... | Federal: Assaulting, Resisting, or Impeding Ce... | NaN |

| 2 | Juliano Gross New 28 years old Kearny, New Jersey | The FBI alleges that Juliano Gross documented ... | Federal: Knowingly Entering or Remaining in an... | NaN |

| 3 | Kirstyn Niemela New 33 years old Hudson, New H... | “The whole nation will watch it happen,” Kirst... | Federal: Entering and Remaining in a Restricte... | NaN |

| 4 | Jeffrey Schaefer New 35 years old Milton, Dela... | Law enforcement claimed in a court filing that... | Federal: Entering and Remaining in a Restricte... | NaN |

| ... | ... | ... | ... | ... |

| 743 | Kyle James Young 37 years old Redfield, Iowa | Federal prosecutors accused Kyle Young of assa... | Federal: Obstruction of an Official Proceeding... | Young pleaded Not Guilty to all charges |

| 744 | Philip S. Young 59 years old Sewell, New Jersey | Philip Young allegedly pushed a barricade into... | Federal: Assaulting, Resisting, or Impeding Ce... | Young pleaded Not Guilty to all charges |

| 745 | Darrell Alan Youngers 31 years old Cleveland, ... | “Non violent disobedience is how you take your... | Federal: Entering and Remaining in any Restric... | Youngers pleaded Not Guilty to all charges. |

| 746 | Ryan Scott Zink 32 years old Lubbock, Texas | On Jan. 6, Ryan Zink posted at least three vid... | Federal: Obstruction of an Official Proceeding... | Zink pleaded Not Guilty to all charges |

| 747 | Joseph Elliott Zlab 51 years old Lake Forest P... | The man who federal agents believe to be Josep... | Federal: Entering and Remaining in a Restricte... | Zlab pleaded Guilty to one charge: Parading, D... |

748 rows × 4 columns

Of course, we can do much more with Selenium. You can actually program it to interact with the page: clicking on links, scrolling to the bottom of the page, etc. These tools are very useful on some webpages!

Crawling Along Our Directory#

url2 = "https://www.ecok.edu/directory"

driver.get(url2)

soup = BeautifulSoup(driver.page_source, 'html.parser')

tables = soup.find_all('table')

pa.read_html(str(tables[0]))[0]

| Last Name | Department | Position | Phone | Building | Office | ||

|---|---|---|---|---|---|---|---|

| 0 | Adams, Larame | Police Department | Police Officer | 580-559-5760 | ladams@ecok.edu | Chickasaw Business & Conference Center | ECU Police Department |

| 1 | Adamson, Ashley | Housing & Residence Life | Residence Director | 580-559-5127 | aadamson@ecok.edu | Chokka-Chaffa’ Hall | 131 |

| 2 | Aguirre-Berman, Alexandra | Department of Performing Arts | Adjunct Instructor of Flute | 580-559-5736 | aaguirre@ecok.edu | Hallie Brown Ford Fine Arts Center | 146 |

| 3 | Allen, Debbie | Administration and Finance | Administrative Assistant, | 580-559-5539 | dallen@ecok.edu | Administration Building | 163 |

| 4 | Ananga, Erick | Department of Politics, Law and Society | Assistant Professor | 580-559-5413 | eananga@ecok.edu | Horace Mann | 237D |

| 5 | Anderson, Destini | Athletics | Head Softball Coach | 580-559-5363 | dfanderson@ecok.edu | Women's Athletic Facility | 119 |

| 6 | Anderson, Ty | Employment Services | Director, Assistant VP of Admin & Finance | 580-559-5217 | tydand@ecok.edu | Administration Building | 160 |

| 7 | Anderson, Reanna | Oka' Institute Public Service | Operations Assistant | 580-559-5151 | reahand@ecok.edu | Fentem Hall | 114 |

| 8 | Andrews, Kenneth | College of Health and Sciences | Dean College of Health and Sciences | 580-559-5496 | kandrews@ecok.edu | Physical and Environmental Science Center | 101A |

| 9 | Arcos, Jaime | Facilities Management | Custodian | 580-559-5377 | jarcos@ecok.edu | Physical Plant | NaN |

| 10 | Armstrong, Pamla | Child Care Resource & Referral Agency | Assistant Director | 580-559-5303 | parmstrong@ecok.edu | Fentem Hall | 131 |

| 11 | Armstrong, Darrel | Police Department | Police Officer | 580-559-5555 | darmstrong@ecok.edu | Chickasaw Business & Conference Center | NaN |

| 12 | Autrey, LaDonna | Department of Psychology | Instructor | 580-559-5328 | lautrey@ecok.edu | Lanoy Education Building | 211A |

| 13 | Baggech, Melody | Department of Performing Arts | Professor of Music | 580-559-5464 | mbaggech@ecok.edu | Hallie Brown Ford Fine Arts Center | 150 |

| 14 | Bailey, Jessika | Office of International Student Services | Director | 580-559-5252 | jbailey@ecok.edu | Administration Building | 152 |

| 15 | Bailey, Shelley | Department of Professional Programs and Human ... | Instructor | 580-559-5460 | rrbailey@ecok.edu | Horace Mann | 220 C |

| 16 | Bailey III, Riley W. | Athletics | Head Coach Women's Soccer | 580-559-5747 | rbailey@ecok.edu | Women's Athletic Facility | 117 |

| 17 | Baker, Nelaina (Lainie) | Department of Psychology | Secretary | 580-559-5319 | nelbak@ecok.edu | Lanoy Education Building | 204 |

| 18 | Baker, Amy | Office of Purchasing | Purchasing Specialist | 580-559-5264 | amycbak@ecok.edu | Administration Building | 164 |

Here I have gathered the first page of the ECU directory. Say I want to gather all the tables. I am going to click on the link for each letter of the alphabet and add each table to my collection, starting with A.

from selenium.webdriver.common.by import By

elem = driver.find_element(By.LINK_TEXT, 'A')

elem.click()

soup = BeautifulSoup(driver.page_source, 'html.parser')

table = soup.find_all('table')

df = pa.read_html(str(table[0]))[0]

df['Table'] = 'A'

df

| Last Name | Department | Position | Phone | Building | Office | Table | ||

|---|---|---|---|---|---|---|---|---|

| 0 | Adams, Larame | Police Department | Police Officer | 580-559-5760 | ladams@ecok.edu | Chickasaw Business & Conference Center | ECU Police Department | A |

| 1 | Adamson, Ashley | Housing & Residence Life | Residence Director | 580-559-5127 | aadamson@ecok.edu | Chokka-Chaffa’ Hall | 131 | A |

| 2 | Aguirre-Berman, Alexandra | Department of Performing Arts | Adjunct Instructor of Flute | 580-559-5736 | aaguirre@ecok.edu | Hallie Brown Ford Fine Arts Center | 146 | A |

| 3 | Allen, Debbie | Administration and Finance | Administrative Assistant, | 580-559-5539 | dallen@ecok.edu | Administration Building | 163 | A |

| 4 | Ananga, Erick | Department of Politics, Law and Society | Assistant Professor | 580-559-5413 | eananga@ecok.edu | Horace Mann | 237D | A |

| 5 | Anderson, Destini | Athletics | Head Softball Coach | 580-559-5363 | dfanderson@ecok.edu | Women's Athletic Facility | 119 | A |

| 6 | Anderson, Ty | Employment Services | Director, Assistant VP of Admin & Finance | 580-559-5217 | tydand@ecok.edu | Administration Building | 160 | A |

| 7 | Anderson, Reanna | Oka' Institute Public Service | Operations Assistant | 580-559-5151 | reahand@ecok.edu | Fentem Hall | 114 | A |

| 8 | Andrews, Kenneth | College of Health and Sciences | Dean College of Health and Sciences | 580-559-5496 | kandrews@ecok.edu | Physical and Environmental Science Center | 101A | A |

| 9 | Arcos, Jaime | Facilities Management | Custodian | 580-559-5377 | jarcos@ecok.edu | Physical Plant | NaN | A |

| 10 | Armstrong, Darrel | Police Department | Police Officer | 580-559-5555 | darmstrong@ecok.edu | Chickasaw Business & Conference Center | NaN | A |

| 11 | Armstrong, Pamla | Child Care Resource & Referral Agency | Assistant Director | 580-559-5303 | parmstrong@ecok.edu | Fentem Hall | 131 | A |

| 12 | Autrey, LaDonna | Department of Psychology | Instructor | 580-559-5328 | lautrey@ecok.edu | Lanoy Education Building | 211A | A |

Now that I have the A’s I’ll do a for loop and automate. Why type out all the letters when you can spend 5 minutes googling a solution?

import string

alpha = list(string.ascii_uppercase)[1:]

Now I move through the for loop. I hit a problem at “X” as there were no people in the directory there and it would not go forward so I made it go back when it hit that error.

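for i in alpha:
    # click the link for this letter and parse the page it loads
    elem = driver.find_element(By.LINK_TEXT, i)
    elem.click()
    soup = BeautifulSoup(driver.page_source, 'html.parser')
    table = soup.find_all('table')
    try:
        df1 = pa.read_html(str(table[0]))[0]
        df1['Table'] = i
        # DataFrame.append was removed in pandas 2.0; concat does the same job
        df = pa.concat([df, df1], ignore_index=True)
    except Exception:
        # no table on this page (this happened at "X"), so go back
        driver.back()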

df

| Last Name | Department | Position | Phone | Building | Office | Table | ||

|---|---|---|---|---|---|---|---|---|

| 0 | Adams, Larame | Police Department | Police Officer | 580-559-5760 | ladams@ecok.edu | Chickasaw Business & Conference Center | ECU Police Department | A |

| 1 | Adamson, Ashley | Housing & Residence Life | Residence Director | 580-559-5127 | aadamson@ecok.edu | Chokka-Chaffa’ Hall | 131 | A |

| 2 | Aguirre-Berman, Alexandra | Department of Performing Arts | Adjunct Instructor of Flute | 580-559-5736 | aaguirre@ecok.edu | Hallie Brown Ford Fine Arts Center | 146 | A |

| 3 | Allen, Debbie | Administration and Finance | Administrative Assistant, | 580-559-5539 | dallen@ecok.edu | Administration Building | 163 | A |

| 4 | Ananga, Erick | Department of Politics, Law and Society | Assistant Professor | 580-559-5413 | eananga@ecok.edu | Horace Mann | 237D | A |

| ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 306 | Yoncha, Anne | Art + Design : Media + Communication | Assistant Professor of Art | 580-559-5355 | ayoncha@ecok.edu | Hallie Brown Ford Fine Arts Center | 175 | Y |

| 307 | York, Christopher | Department of English and Languages | Adjunct Instructor of English | 580-559-5471 | chrfyor@ecok.edu | Horace Mann | 329A | Y |

| 308 | Youngblood, Susan | Career Center | Director | 580-559-5890 | susryou@ecok.edu | Administration Building | 155 | Y |

| 309 | Zachary, Kimberly | Business Administration | Adjunct | NaN | kzachary@ecok.edu | NaN | NaN | Z |

| 310 | Zhang, Hongkai | Business Administration | Professor | 580-559-5561 | hzhang@ecok.edu | Chickasaw Business & Conference Center | 359 | Z |

311 rows × 8 columns
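The loop above quietly skips any letter that fails. A small variation, sketched here under some assumptions, keeps a record of which letters were skipped so you can check them by hand. The helper name `visit_letters` is made up, the `"<table"` substring check is a crude stand-in for real parsing, and `"link text"` is the string that Selenium's `By.LINK_TEXT` holds. The function only relies on `find_element`, `page_source`, and `back()`, so it takes the driver as an argument.

```python
def visit_letters(driver, letters, by="link text"):
    """Click each letter link and keep the page source it leads to.

    Returns (pages, skipped): a dict of letter -> HTML for pages that
    contain a table, and a list of the letters that did not.
    """
    pages, skipped = {}, []
    for letter in letters:
        driver.find_element(by, letter).click()
        html = driver.page_source
        if "<table" in html.lower():
            pages[letter] = html
        else:
            # Nothing to scrape here: note it and return to the previous page.
            skipped.append(letter)
            driver.back()
    return pages, skipped
```

With the real driver this would be called as `pages, skipped = visit_letters(driver, alpha)`, and each saved page could then be handed to BeautifulSoup as before.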

Overall, I would say this is very challenging and super specific to the website you are working on. I tried many times to get Selenium to find the links in many different ways. I think the agreed-upon method is XPath.

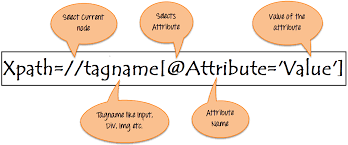

X Path#

XPath is great for finding boxes and forms on webpages (maybe links too!). You start with the tag name to get just those elements of the page and then, if needed, add another identifier such as an attribute. Here is a nice image.

Let’s see this in action on a simple page. DuckDuckGo is a search engine, and it has a box for entering a search. The entry box sits in a ‘form’ with id ‘search_form_homepage’, and the box itself is an ‘input’ with id ‘search_form_input_homepage’. I can get Selenium to it by using the XPath //input[@id="search_form_input_homepage"]

url2 = "https://duckduckgo.com"

driver.get(url2)

elem = driver.find_element(By.XPATH,'//input[@id="search_form_input_homepage"]' )

I can also get to the icon that runs the search with the XPath //input[@id="search_button_homepage"]
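To get a feel for how a tag name plus an attribute test picks out one element, here is a small sketch you can run without a browser. It uses the limited XPath support in Python's standard `xml.etree.ElementTree`. The markup is a made-up, simplified stand-in that only reuses the ids mentioned above; the real page is much larger.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified markup; not the actual DuckDuckGo page.
page = """
<html>
  <body>
    <form id="search_form_homepage">
      <input id="search_form_input_homepage" type="text" name="q" />
      <input id="search_button_homepage" type="submit" value="S" />
    </form>
  </body>
</html>
"""

root = ET.fromstring(page)

# './/input' means "any input below this node"; the [@id='...'] part
# keeps only the one whose id attribute matches.
box = root.find(".//input[@id='search_form_input_homepage']")
button = root.find(".//input[@id='search_button_homepage']")

print(box.attrib["type"])     # text
print(button.attrib["type"])  # submit
```

ElementTree wants the leading `.` because it searches relative to `root`, whereas Selenium searches the whole document with `//input[...]`.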

Other Selenium Commands#

We used the click() command above. I think that is really cool to see the driver interacting with the web page! Let’s show you a few others that are nice.

send_keys will allow you to send information to the element you have selected! You can use this to send text or even hit certain keys on your keyboard. The one we’ll need is Keys.ENTER, which hits the carriage return (yes, I am so old that I still call it that!). Let me search for myself.

from selenium.webdriver.common.keys import Keys #this did take another part of selenium not yet loaded!

elem.send_keys('Nicholas Jacob')

elem.send_keys(Keys.ENTER)

So where did this get me?

driver.current_url

'https://duckduckgo.com/?q=Nicholas+Jacob&t=h_&ia=web'
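Notice that the search ended up in the URL itself. As a small sketch with the standard library, the query string can be pulled apart to see what was sent, and a similar one can be built for another search. The second search term is just an example of mine.

```python
from urllib.parse import parse_qs, urlencode, urlparse

url = "https://duckduckgo.com/?q=Nicholas+Jacob&t=h_&ia=web"

parts = urlparse(url)
params = parse_qs(parts.query)
print(params["q"])  # ['Nicholas Jacob']

# Going the other way: build the query string for a different search.
query = urlencode({"q": "web scraping", "ia": "web"})
print(f"{parts.scheme}://{parts.netloc}/?{query}")
# https://duckduckgo.com/?q=web+scraping&ia=web
```

Whether a site accepts a hand-built URL like this depends on the site, so treat it as something to try rather than a guarantee.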

What is here?

soup = BeautifulSoup(driver.page_source, 'html.parser')

# print the address behind every search result link
for i in soup.find_all('a', class_="result__check"):
    print(i['href'])

https://www.kinopoisk.ru/name/2801548/

https://www.imdb.com/name/nm4966502/

https://www.facebook.com/nicholas.jacob1

https://ru.kinorium.com/name/2727104/

https://en.wikipedia.org/wiki/Stoneman_Douglas_High_School_shooting

https://www.film.ru/person/nicholas-jacob

https://www.tumblr.com/tagged/nicholas-jacob

https://www.youtube.com/watch?v=AwJj9D9neUo

https://www.wikitree.com/wiki/Jacob-2

https://www.listal.com/nicholas-jacob

You can also navigate the page with driver.refresh(), driver.back(), and driver.forward(), and scroll by running JavaScript through driver.execute_script(), if you need to!
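Scrolling does not have a one-word driver call the way the navigation commands do. One common approach, sketched here, is to have the browser run a line of JavaScript with `execute_script`. The helper name `scroll_to_bottom` is my own, and it assumes `driver` is a Selenium WebDriver like the one created earlier.

```python
def scroll_to_bottom(driver):
    """Scroll the current page to the bottom and return the page height.

    `driver` is assumed to be a Selenium WebDriver; `execute_script` runs
    a snippet of JavaScript in the page and returns its result.
    """
    driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
    return driver.execute_script("return document.body.scrollHeight;")
```

On pages that load more content as you scroll, calling this in a loop until the returned height stops changing is one way to reach the real bottom.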

Waiting Is Hard#

Lots of times I find that my Python output is behind where I am browsing. If that is the case, use a wait to give the page time to catch up. implicitly_wait tells the driver to keep retrying element lookups for up to the given number of seconds before giving up.

driver.implicitly_wait(10)

Or you could do it by a condition!

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.support import expected_conditions as EC

WebDriverWait(driver, 1).until(
    EC.presence_of_element_located((By.ID, "myDynamicElement"))
)

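I found the best condition, especially when I am navigating the web, was the staleness_of command. It waits until an element from the old page is no longer attached to the page, which signals that the new page has replaced it.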

from selenium.webdriver.support.expected_conditions import staleness_of

old_page = driver.find_element(By.XPATH,'//html')

driver.find_element(By.PARTIAL_LINK_TEXT,'some_text').click()

WebDriverWait(driver, 10).until(staleness_of(old_page))
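If the conditional waits feel like magic, the idea underneath is just "keep checking until it is true or time runs out." Here is a bare-bones sketch of that idea in plain Python. It is an illustration only, not Selenium's actual implementation, and `wait_until` and `page_ready` are names I made up.

```python
import time


def wait_until(condition, timeout=10.0, poll=0.5):
    """Call condition() repeatedly until it returns something truthy.

    Returns that value, or raises TimeoutError after `timeout` seconds.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError("condition was not met in time")
        time.sleep(poll)


# Toy condition: pretend the page is "ready" on the third check.
checks = {"count": 0}


def page_ready():
    checks["count"] += 1
    return checks["count"] >= 3


print(wait_until(page_ready, timeout=2, poll=0.01))  # True
print(checks["count"])                               # 3
```

In real scraping code, stick with WebDriverWait and the expected conditions; this sketch is only meant to show what the waiting loop is doing.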

Your Turn#

Use Python to browse to Google. Search for something of interest and go to the first link. Return the HTML from that first page.